T3 Magazine / Getty Images

Academic researchers have devised a new exploit that commandeers Amazon Echo smart speakers and forces them to unlock doors, make unauthorized phone calls and purchases, and control ovens, microwaves, and other smart appliances.

The attack works by using the device’s own speaker to issue voice commands. Researchers from Royal Holloway, University of London, and Italy’s University of Catania found that as long as the speech contains the device’s wake word (usually “Alexa” or “Echo”) followed by a permissible command, the Echo will carry it out. Even when devices require verbal confirmation before executing sensitive commands, it is trivial to bypass the measure by adding the word “yes” about six seconds after the command is issued. Attackers can also exploit what the researchers call “FVV,” or full volume vulnerability, which allows Echos to execute self-issued commands without temporarily reducing the device’s volume.
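The acceptance behavior described above can be sketched as a toy model. This is an illustration built only from the article's description, not Amazon's implementation: the `Segment` type, the `accepts` function, the example command sets, and the exact six-second threshold are all assumptions made for the sketch.

```python
from dataclasses import dataclass

WAKE_WORDS = ("alexa", "echo")  # wake words named in the article
CONFIRM_DELAY_S = 6.0           # "about six seconds"; exact value is assumed


@dataclass
class Segment:
    start_s: float  # when the speech begins, relative to the first segment
    text: str       # what is spoken


def accepts(segments, allowed, sensitive):
    """Hypothetical model: return the command that would run, or None."""
    if not segments:
        return None
    words = segments[0].text.lower().rstrip(".!?").split()
    # The speech must start with a wake word...
    if not words or words[0].strip(",") not in WAKE_WORDS:
        return None
    # ...followed by a permissible command.
    command = " ".join(words[1:])
    if command not in allowed:
        return None
    if command in sensitive:
        # The only extra hurdle described is a spoken "yes" a few seconds later.
        confirmed = any(
            s.text.strip().lower() == "yes" and s.start_s >= CONFIRM_DELAY_S
            for s in segments[1:]
        )
        if not confirmed:
            return None
    return command


# Example command sets, invented for illustration.
allowed = {"turn off the lights", "unlock the front door"}
sensitive = {"unlock the front door"}

stream = [Segment(0.0, "Alexa, unlock the front door"), Segment(6.0, "yes")]
print(accepts(stream, allowed, sensitive))
```

Nothing in this model checks where the audio came from. That gap is what the researchers exploit: speech played through the Echo's own speaker is treated the same as speech from a person in the room.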

Alexa, go hack yourself

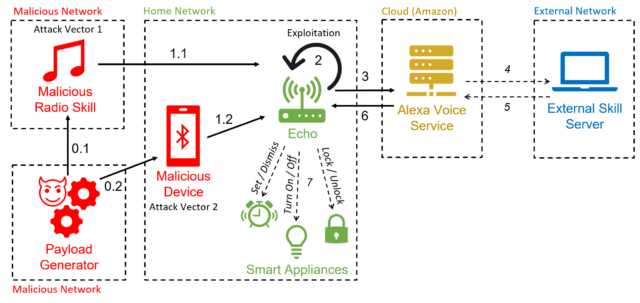

Because the hack uses Alexa functionality to force devices to make self-issued commands, the researchers have dubbed it “AvA,” short for Alexa versus Alexa. It requires only a few seconds of proximity to a vulnerable device while it is turned on, so that an attacker can utter a voice command instructing it to pair with the attacker’s Bluetooth-enabled device. As long as that device remains within radio range of the Echo, the attacker will be able to issue commands.

The attack “is the first of its kind that exploits the vulnerability of arbitrary self-commands on Echo devices, allowing attackers to control them for an extended period of time,” the researchers wrote in a paper posted two weeks ago. “With this work, we remove the necessity of having an external speaker close to the target device, which increases the overall likelihood of an attack.”

One variation of the attack uses a malicious radio station to generate the self-issued commands. That variation is no longer possible in the manner described in the paper, following security patches that Echo-maker Amazon released in response to the research. The researchers have confirmed that the attacks work against third- and fourth-generation Echo Dot devices.

Esposito et al.

AvA begins when a vulnerable Echo device connects via Bluetooth to the attacker’s device (or, for unpatched Echos, when it plays the malicious radio station). From then on, the attacker can use a text-to-speech app or any other means to stream voice commands. Here is a video of AvA in action. All variations of the attack remain viable, except for the one shown between 1:40 and 2:14:

Alexa vs Alexa – Demo.

The researchers found that they could use AvA to force devices to carry out a range of commands, many of which have serious privacy or security consequences. Possible malicious actions include:

- Control other smart appliances, such as turning off lights, turning on a smart microwave oven, setting the heating to an unsafe temperature, or unlocking smart door locks. As mentioned earlier, when Echos require confirmation, the adversary only needs to append a “yes” to the command about six seconds after the request.

- Call any phone number, including one controlled by the attacker, so that nearby sounds can be eavesdropped on. While Echos use a light to indicate that they are on a call, the devices are not always visible to users, and less experienced users may not know what the light means.

- Make unauthorized purchases using the victim’s Amazon account. Although Amazon will send an email to notify the victim of the purchase, the email may be missed or the user may lose trust in Amazon. Alternatively, attackers could also delete items already in the account’s shopping cart.

- Tamper with a user’s previously linked calendar to add, move, delete, or modify events.

- Impersonate skills or start any skill of the attacker’s choice. This, in turn, could allow attackers to obtain passwords and personal data.

- Retrieve all utterances made by the victim. Using what the researchers call a “mask attack,” an adversary can intercept commands and store them in a database. This may allow the adversary to extract private data, gather information about the skills used, and infer user habits.

The researchers wrote:

With these tests, we demonstrated that AvA can be used to give arbitrary commands of any type and length, with optimal results. In particular, an attacker can control smart lights with a 93% success rate, successfully buy unwanted items on Amazon 100% of the times, and tamper [with] a linked calendar with 88% success rate. Complex commands that have to be recognized correctly in their entirety to succeed, such as calling a phone number, have an almost optimal success rate, in this case 73%. Additionally, results shown in Table 7 demonstrate the attacker can successfully set up a voice masquerading attack via our mask attack skill without being detected, and all issued utterances can be retrieved and stored in the attacker’s database, namely 41 in our case.
