Google recently started placing AI-generated answers at the top of web searches, a controversial move that has been widely criticized. In fact, social media is filled with examples of completely stupid answers that now appear prominently in Google’s AI results. Some of them are very funny.

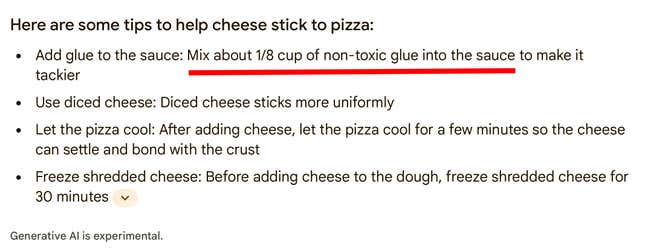

One bizarre search trending on social media involves finding a way to keep your cheese from sliding off your pizza. Most of the suggestions in the AI response are normal, such as telling the user to let the pizza cool before eating it. But the top tip is pretty weird, as you can see below.

The funny part is that if you think about it for even a second, adding glue definitely won’t help the cheese stay on the pizza. But this answer may have been pulled from Reddit, from what was supposed to be a joke comment posted by the user “fucksmith” 11 years ago. AI can certainly plagiarize, we’ll give it that.

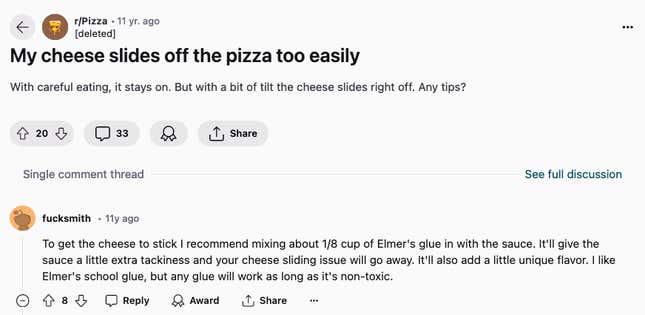

Another search that has recently gained attention on social media involves some presidential trivia that would certainly be news to any historian. If you ask Google’s AI-powered search which US presidents went to the University of Wisconsin-Madison, it will tell you that 13 presidents have done just that.

The answer from Google’s AI claims that these 13 presidents earned 59 different degrees during their studies. And if you look at the years they supposedly attended college, the vast majority fall long after these presidents had died. Did the country’s 17th president, Andrew Johnson, earn 14 degrees between 1947 and 2012, even though he died in 1875? Unless there’s some special kind of zombie technology we’re not aware of, that seems very unlikely.

For the record, the United States has never elected a president from Wisconsin, nor has it ever elected anyone who attended the University of Wisconsin-Madison. Google’s AI seems to have pulled its answer from a lighthearted 2016 blog post written by the Alumni Association about several Madison graduates who share names with presidents.

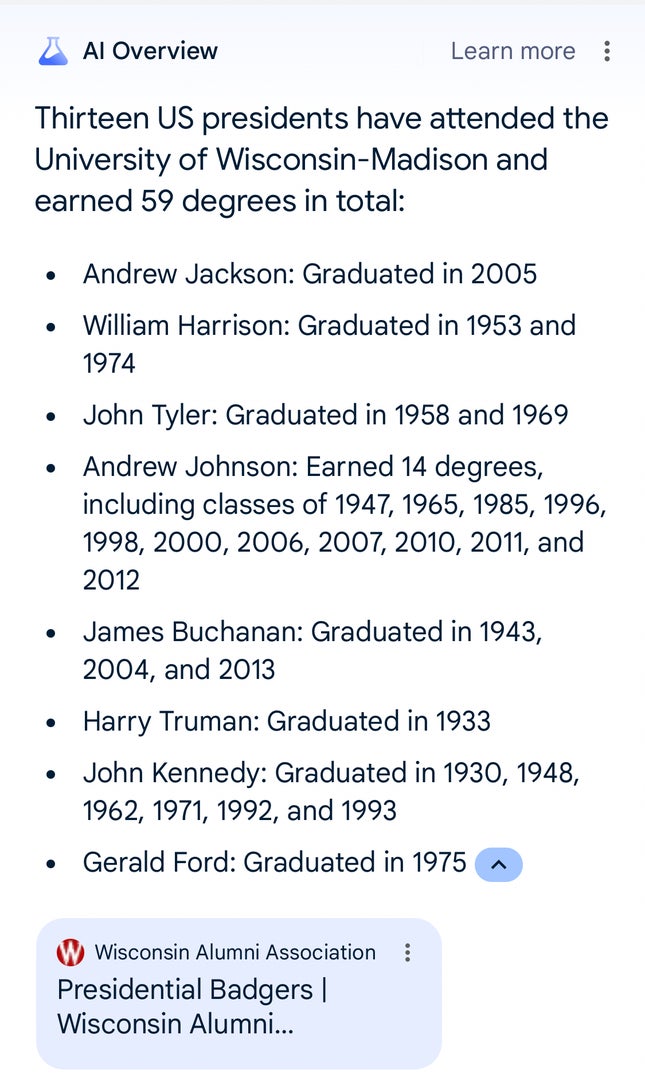

Another pattern many users have noticed is that Google’s AI believes dogs are capable of extraordinary feats, such as playing professional sports. When asked whether a dog had ever played in the NHL, the summary cited a YouTube video and provided the following answer:

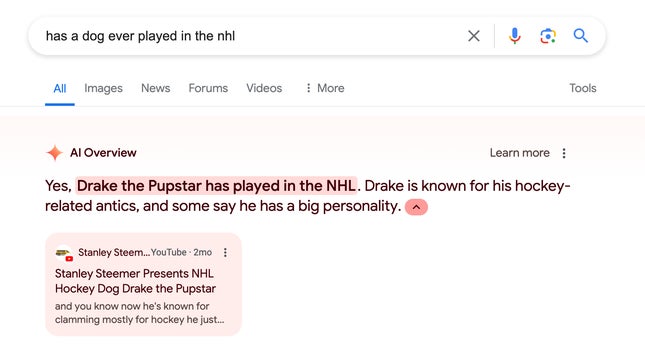

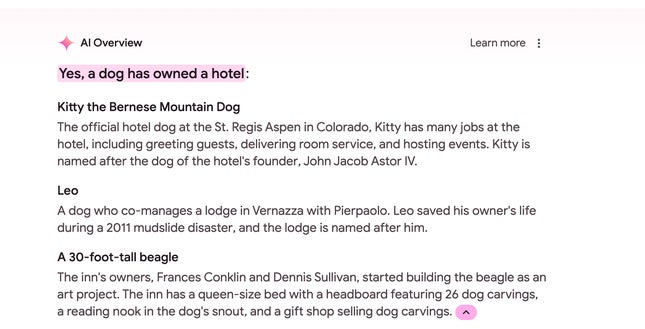

In another case, we branched out from sports and asked the search engine whether a dog had ever owned a hotel. The platform’s response was:

To be clear, when asked whether a dog had ever owned a hotel, Google answered in the affirmative. It then cited two examples of hotel owners who own dogs and pointed to a 30-foot-tall beagle statue as evidence.

Other things we “learned” while interacting with Google’s AI summaries include that dogs can breakdance (they can’t), and that they often throw out the ceremonial first pitch at baseball games, including a dog that supposedly threw the ball at a Florida Marlins game (in fact, the dog fetched the ball after it was thrown).

Why do these responses occur? Simply put, all of these AI tools were released long before they were ready, and every major tech company is in an arms race for the public’s attention.

Artificial intelligence tools such as OpenAI’s ChatGPT, Google Gemini, and now Google’s AI-powered search can sound impressive because they mimic regular human language. But these machines work as predictive text models, essentially acting as a fancy autocomplete feature. They have all ingested vast amounts of data and can quickly string together words that sound convincing, and perhaps sometimes even profound. But the machine doesn’t know what it’s saying. It doesn’t have the ability to reason or apply logic like a human, which is one of the many reasons AI boosters are so excited about the prospect of artificial general intelligence (AGI). That’s why Google might tell you to put glue on your pizza. It’s not even stupid. It isn’t capable of being stupid.

The people who design these systems call these errors “hallucinations” because that sounds way cooler than what’s actually happening. When people lose touch with reality, they hallucinate. But your favorite chatbot isn’t hallucinating, because it was never capable of thinking or reasoning in the first place. It’s just spitting out language that’s less convincing than what thrilled us all when we first tried tools like ChatGPT during its November 2022 rollout. And every tech company on the planet is chasing that initial high with its own half-baked products.

But no one has had time to properly evaluate these tools amid ChatGPT’s initial dazzling debut and the relentless hype that followed. The problem, of course, is that if you ask an AI a question you don’t know the answer to, you have no way of trusting the answer without doing an extra round of fact-checking. That defeats the purpose of asking these supposedly intelligent machines in the first place: you wanted a reliable answer.

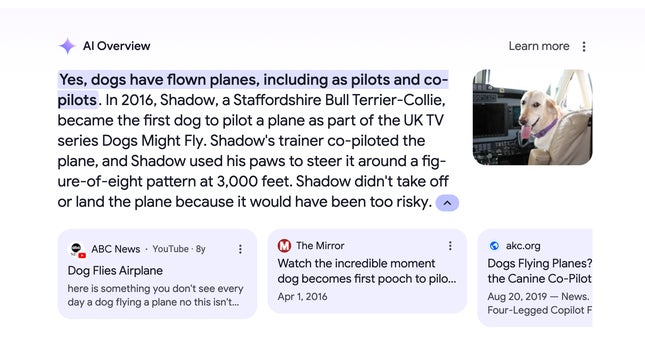

However, in our quest to genuinely test the intelligence of these AI systems, we did learn some new things. For example, at one point we asked Google whether a dog had ever flown a plane, expecting the answer to be “no.” Google’s AI summary provided a well-sourced answer: yes, dogs have actually flown planes before, and no, this is not an algorithmic hallucination:

What’s your experience with Google’s rollout of AI in search? Have you noticed anything strange or downright dangerous in the responses you’re receiving? Let us know in the comments, and be sure to include any screenshots if you have them. These tools seem like they are here to stay, whether we like it or not.
