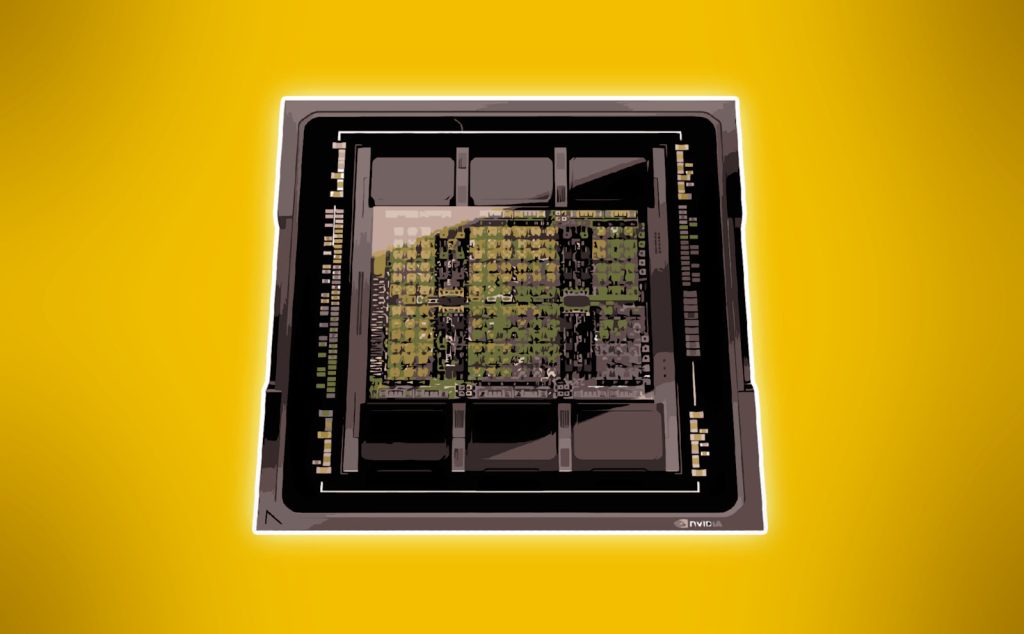

NVIDIA H100 Specifications

Some details about NVIDIA’s next-generation AI accelerator leaked just an hour before the announcement.

Contrary to rumors that the Hopper-based NVIDIA H100 would use TSMC N5, NVIDIA announced today that its latest accelerator will use a custom TSMC N4 process. The rumors were also wrong about the transistor count: this single GPU is built with 80 billion transistors. The (low-quality) slides we have do not yet give the exact specifications of the Hopper GPU, such as the CUDA core count. For those, allow approximately an hour for NVIDIA to publish the full architecture white paper.

NVIDIA H100 Specifications, Source: VideoCardz

The NVIDIA H100 has HBM3 memory with 3 TB/s of bandwidth, 1.5 times that of the A100. In terms of performance, NVIDIA claims 3 times the A100's compute throughput in FP64, TF32 and FP16, and 6 times in FP8.

The accelerator will come in PCIe Gen5 or SXM form factors. The latter will have a TDP of 700 W, exactly 300 W more than the A100.
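The comparisons above imply a particular A100 baseline, which is easy to back out. The following is a minimal Python sketch, assuming that "1.5 times" is a plain ratio and that the 300 W difference refers to the SXM variant of the A100; the constant and function names are illustrative, not taken from NVIDIA.

```python
# Figures quoted in the article for the H100 (SXM form factor).
H100_BANDWIDTH_TB_S = 3.0       # HBM3 memory bandwidth
BANDWIDTH_RATIO_VS_A100 = 1.5   # assumption: read as a plain 1.5x ratio
H100_SXM_TDP_W = 700
TDP_DELTA_VS_A100_W = 300       # "300 W more than the A100"


def implied_a100_baseline():
    """Back out the A100 figures implied by the article's comparisons."""
    return {
        "bandwidth_tb_s": H100_BANDWIDTH_TB_S / BANDWIDTH_RATIO_VS_A100,
        "tdp_w": H100_SXM_TDP_W - TDP_DELTA_VS_A100_W,
    }


baseline = implied_a100_baseline()
print(f"Implied A100 bandwidth: {baseline['bandwidth_tb_s']:.1f} TB/s")
print(f"Implied A100 TDP:       {baseline['tdp_w']} W")
```

Under these assumptions the implied baseline is 2.0 TB/s and 400 W.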

NVIDIA Grace SuperChips Specifications, Source: VideoCardz

NVIDIA is also releasing two ARM-based Grace CPU platforms today. One features a Grace CPU integrated with a Hopper GPU featuring 600GB of memory, while the other features two Grace CPUs with a total of 144 cores. It will be equipped with LPDDR5X memory. Both Grace SuperChips will be available in the first half of 2023.

Rumored NVIDIA Data Center GPU Specifications

| VideoCardz.com | NVIDIA H100 | NVIDIA A100 | NVIDIA Tesla V100 | NVIDIA Tesla P100 |
|---|---|---|---|---|
| GPU | GH100 | GA100 | GV100 | GP100 |
| Transistors | 80B | 54.2B | 21.1B | 15.3B |
| Die size | TBC | 828 mm² | 815 mm² | 610 mm² |
| Architecture | Hopper | Ampere | Volta | Pascal |
| Manufacturing node | TSMC N4 | TSMC N7 | 12nm FFN | 16nm FinFET+ |
| GPU clusters | TBC | 108 | 80 | 56 |
| CUDA cores | TBC | 6912 | 5120 | 3584 |
| L2 cache | 48 MB | 40 MB | 6 MB | 4 MB |
| Tensor cores | TBC | 432 | 320 | – |
| Memory bus | 5120-bit | 5120-bit | 4096-bit | 4096-bit |
| Memory size | 80 GB HBM3 | 40/80 GB HBM2e | 16/32 GB HBM2 | 16 GB HBM2 |
| TDP | 700 W | 250 W / 300 W / 400 W | 250 W / 300 W / 450 W | 250 W / 300 W |
| Interface | SXM / PCIe | SXM / PCIe | SXM / PCIe | SXM / PCIe |
| Launch year | 2022 | 2020 | 2017 | 2016 |
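To put the transistor counts in the table in perspective, the generation-over-generation growth can be computed directly. This is a small Python sketch using only the table's values, assuming every count is in billions (the source omits the unit on two entries); the names are illustrative.

```python
# Transistor counts in billions, as listed in the table (newest first).
# Assumption: the unlabeled entries (54.2 and 21.1) are also in billions.
TRANSISTORS_BILLIONS = {
    "H100": 80.0,
    "A100": 54.2,
    "V100": 21.1,
    "P100": 15.3,
}


def generational_growth(counts):
    """Return (newer, older, ratio) for each adjacent pair of generations."""
    names = list(counts)
    return [
        (newer, older, counts[newer] / counts[older])
        for newer, older in zip(names, names[1:])
    ]


for newer, older, ratio in generational_growth(TRANSISTORS_BILLIONS):
    print(f"{newer} vs {older}: {ratio:.2f}x transistors")
```

By this measure the H100 has roughly 1.48 times the transistors of the A100, a smaller jump than the roughly 2.57 times from V100 to A100.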
